Intro

Please welcome former Director of AI at Tesla, Andrej Karpathy. [Music] Hello. [Music] Wow, a lot of people here, hello.

I'm excited to be here today to talk to you about software in the era of AI. I'm told that many of you are students pursuing bachelor's, master's, or PhD degrees and are about to enter the industry. I think it's an extremely unique and interesting time to do so because software is fundamentally changing again.

I say "again" because I have given this talk before, but the problem is that software keeps changing, which provides me with a lot of new material. The change is quite fundamental; software has not changed much at this level for 70 years, but it has changed rapidly about twice in the last few years. Consequently, there is a huge amount of work to do and a vast amount of software to write and rewrite.

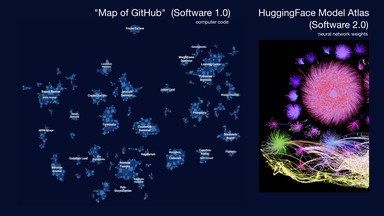

Let's take a look at the realm of software. If we think of this as a map of software, there is a cool tool called Map of GitHub that visualizes all the software that's been written. These are instructions for the computer to carry out tasks in the digital space.

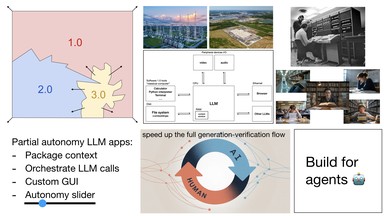

Software evolution: From 1.0 to 3.0

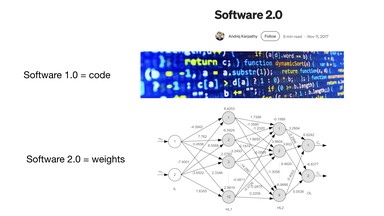

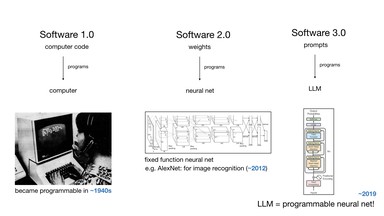

The idea was that Software 1.0 is the code you write for a computer, while Software 2.0 is neural networks, specifically the weights of a neural network. You don't write this code directly; instead, you tune datasets and run an optimizer to create the neural net's parameters. At the time, neural nets were seen as just a different kind of classifier, like a decision tree, so this framing was appropriate.

Now, we have an equivalent of GitHub in the realm of Software 2.0, which is Hugging Face. There is also Model Atlas, where you can visualize the code. For example, the point in the middle of the giant circle represents the parameters of Flux, the image generator. Anytime someone tunes a LoRA on top of a Flux model, they create a 'git commit' in this space, resulting in a different kind of image generator.

So, Software 1.0 is computer code that programs a computer, while Software 2.0 is the weights that program neural networks. Here is an example of the AlexNet image recognizer neural network.

So far, all the neural networks we've been familiar with were fixed-function computers, like image-to-category classifiers. The fundamental change is that neural networks became programmable with large language models. I see this as a new kind of computer, worth designating as Software 3.0.

Programming in English: Rise of Software 3.0

Your prompts are now programs that program the LLM, and remarkably, these prompts are written in English. To summarize the difference: for sentiment classification, you could write Python code, train a neural net, or prompt a large language model. Here, a few-shot prompt can be changed to program the computer in a slightly different way.
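To make the contrast concrete, here is a minimal sketch of the sentiment example in two of the three paradigms. The word lists, the example reviews, and the function names are illustrative assumptions, not something shown in the talk, and the few-shot prompt is only built as a string; sending it to a model is left out.

```python
# Software 1.0: behavior is spelled out in hand-written rules.
# The word lists below are illustrative assumptions.
POSITIVE = {"great", "love", "excellent", "amazing"}
NEGATIVE = {"bad", "hate", "terrible", "awful"}


def classify_rules(text: str) -> str:
    """Count positive and negative words and compare the totals."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Software 3.0: the "program" is an English few-shot prompt.
# Editing these examples changes the behavior without touching any code.
FEW_SHOT_EXAMPLES = [
    ("I love this phone, the battery is amazing.", "positive"),
    ("Terrible service, I want a refund.", "negative"),
]


def build_prompt(text: str, examples=FEW_SHOT_EXAMPLES) -> str:
    """Assemble a few-shot prompt that ends where the model should answer."""
    lines = ["Classify the sentiment of each review as positive or negative.", ""]
    for review, label in examples:
        lines.append(f"Review: {review}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Review: {text}")
    lines.append("Sentiment:")
    return "\n".join(lines)
```

The Software 2.0 variant would sit between the two: the same input and output, but with the rules replaced by weights learned from a labeled dataset.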

We now have Software 1.0, Software 2.0, and a growing category of new code. You may have seen that a lot of GitHub code is no longer just code; it has English interspersed with it. Not only is this a new programming paradigm, but it's also remarkable that it's in our native language.

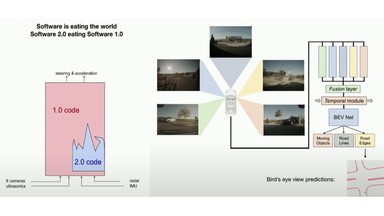

When this blew my mind a few years ago, I tweeted about it, and it captured the attention of many people. My currently pinned tweet is: "Remarkably, we're now programming computers in English." When I was at Tesla working on the autopilot, we were trying to get the car to drive.

I showed a slide where the car's inputs go through a software stack to produce steering and acceleration. I observed that the autopilot had a ton of C++ (Software 1.0) and some neural nets for image recognition. Over time, as we improved the autopilot, the neural network grew in capability, and the C++ code was deleted.

Functionality originally written in 1.0, like stitching information from different cameras across time, was migrated to 2.0. The Software 2.0 stack quite literally ate the 1.0 stack. I thought this was remarkable, and we're seeing the same thing again.

A new kind of software is eating through the stack. We have three different programming paradigms, and if you're entering the industry, it's a good idea to be fluent in all of them because they each have pros and cons. You need to decide whether to use 1.0, 2.0, or 3.0 for a given functionality, and potentially transition between them.

LLMs as utilities, fabs, and operating systems

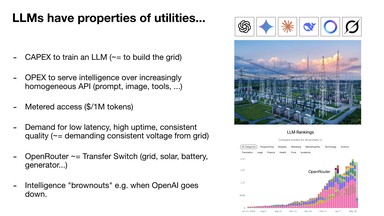

Now, I want to discuss LLMs and how to think about this new paradigm and its ecosystem. I was struck by a quote from Andrew Ng many years ago, who said, "AI is the new electricity." This captures something interesting, as LLMs certainly feel like they have properties of utilities right now. LLM labs like OpenAI, Google, and Anthropic spend CAPEX to train LLMs, similar to building out a power grid.

They then have OPEX to serve that intelligence via APIs, with metered access where we pay per million tokens. We have utility-like demands for this API: low latency, high uptime, and consistent quality. In the same way an electricity transfer switch lets you change power sources, services like OpenRouter let you switch between different types of LLMs.
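As a rough sketch of what metered, per-million-token pricing means in practice, the arithmetic looks like this. The provider names and prices are hypothetical placeholders, not real rates.

```python
# Hypothetical (input, output) prices in USD per million tokens.
PRICES = {
    "provider_a": (3.00, 15.00),
    "provider_b": (0.50, 1.50),
}


def request_cost_usd(input_tokens: int, output_tokens: int,
                     usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Cost of one request when input and output tokens are metered separately."""
    return (input_tokens / 1_000_000) * usd_per_m_input \
        + (output_tokens / 1_000_000) * usd_per_m_output


def cheapest_provider(input_tokens: int, output_tokens: int, prices=PRICES) -> str:
    """Pick the provider with the lowest cost for this request size."""
    return min(
        prices,
        key=lambda name: request_cost_usd(input_tokens, output_tokens, *prices[name]),
    )
```

Switching providers is then a lookup, which is the software counterpart of the transfer switch in the analogy.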

Since LLMs are software, they don't compete for physical space, so having multiple providers is not an issue. It's fascinating that when the state-of-the-art LLMs go down, it's like an intelligence brownout for the world. The planet gets dumber as our reliance on them grows.

However, LLMs don't only have properties of utilities; they also have properties of semiconductor fabs. The CAPEX required to build an LLM is very large, so we are in a world with deep tech trees and R&D secrets centralizing inside these labs. The analogy is a bit muddled because software is less defensible due to its malleability.

You can make many analogies. For instance, a 4-nanometer process node is like a cluster with certain max FLOPS. Using NVIDIA GPUs without designing the hardware is like the fabless model, whereas Google building its own hardware for TPUs is like Intel's model of owning the fab.

However, the analogy that makes the most sense is that LLMs have strong parallels to operating systems. They are not simple commodities like electricity; they are increasingly complex software ecosystems. The ecosystem is even shaping up similarly, with a few closed-source providers (like Windows or macOS) and an open-source alternative (like Linux).

The new LLM OS and historical computing analogies

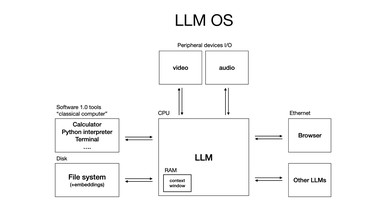

For LLMs, we have a few competing closed-source providers, and the Llama ecosystem is a close approximation to what might grow into a Linux-like alternative. It's still early, but we are seeing these systems become more complicated, involving tool use and multimodality. When I realized this, I sketched it out: the LLM is like a new operating system, acting as the CPU, with the context window as memory, orchestrating memory and compute for problem-solving.

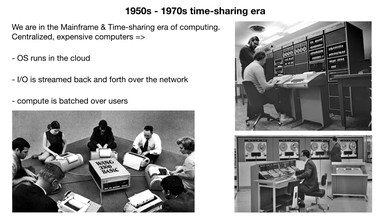

For example, you can download an app like VS Code and run it on Windows, Linux, or Mac. Similarly, you can take an LLM app like Cursor and run it on GPT, Claude, or Gemini—it's just a dropdown menu. Another analogy is that we are in a 1960s-ish era where LLM compute is still very expensive, forcing it to be centralized in the cloud.

We are all thin clients interacting with it over the network, using time-sharing because none of us has full utilization of these computers. This is what computing used to look like, with operating systems in the cloud, batching, and streamed data. The personal computing revolution hasn't happened yet because it's not economical.

Some people are trying, and it turns out that Mac Minis are a good fit for some of the LLMs because batch-one inference is memory-bound. These might be early indications of personal computing, but it's unclear what this will look like or who will invent it.

One more analogy: whenever I talk to an LLM directly in text, I feel like I'm talking to an operating system through the terminal. A general-purpose GUI for LLMs hasn't been invented yet. While specific apps have GUIs, there isn't one that works across all tasks.

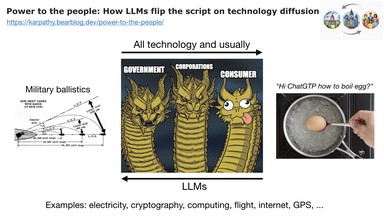

LLMs are different from early operating systems in one unique way: they flip the direction of technology diffusion. Typically, technologies like electricity, computing, and the internet were first used by governments and corporations because they were new and expensive, only later diffusing to consumers. With LLMs, it's the other way around.

Early computers were for military ballistics, but LLMs are helping me with tasks like boiling an egg. It's fascinating that this magical new computer is not primarily helping governments with secret technology; instead, corporations and governments are lagging behind consumer adoption. This reversal informs how we should think about the first applications for this technology.

In summary, while "LLM labs fab LLMs" is accurate language, it's better to see LLMs as complicated operating systems, currently in their circa-1960s phase, distributed like a utility via time-sharing. What's unprecedented is that they are not just in the hands of a few powerful entities but in the hands of everyone with a computer. ChatGPT was beamed down to billions of people overnight, which is insane. Now is our time to enter the industry and program these remarkable computers.

Psychology of LLMs: People spirits and cognitive quirks

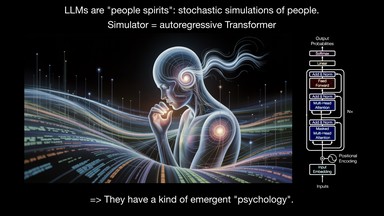

Before we do, we must understand their psychology. I like to think of LLMs as 'people spirits'—stochastic simulations of people, where the simulator is an autoregressive Transformer.

This simulator is fit to all the text on the internet, and because it's trained on human data, it has an emergent, human-like psychology. The first thing you'll notice is their encyclopedic knowledge and memory, far exceeding any single human's. It reminds me of the movie Rain Man, where the protagonist is an autistic savant with a nearly perfect memory.

LLMs are similar; they can easily remember things like SHA hashes, giving them superpowers in some respects. However, they also have cognitive deficits. They hallucinate, lack a good internal model of self-knowledge, and display jagged intelligence, meaning they are superhuman in some domains but make mistakes no human would. You can trip on these rough edges.

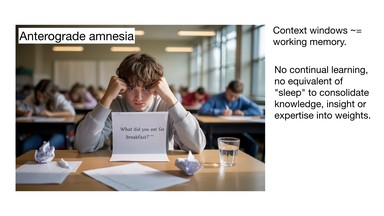

They also suffer from a form of anterograde amnesia. A human coworker learns and consolidates knowledge over time, but LLMs don't natively do this, as context windows are just working memory.

This trips people up. For a cultural reference, watch Memento or 50 First Dates, where the protagonists' memories are wiped clean each day. LLMs also have security limitations; they are gullible, susceptible to prompt injection, and might leak your data.

In short, we have to work with this superhuman tool that also has significant cognitive deficits. The challenge is to program them effectively, working around their deficits while leveraging their powers.
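One practical consequence of the context window being only working memory is that an app has to decide what to resend on every call. Below is a minimal sketch of that bookkeeping, assuming a chat-style list of role/content messages and a crude whitespace token count; real tokenizers and real APIs will differ.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_window(messages: list[dict], budget: int) -> list[dict]:
    """Keep system messages plus the most recent turns that fit in `budget` tokens.

    Anything dropped here is simply forgotten by the model on the next call.
    """
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(count_tokens(m["content"]) for m in system)

    kept = []
    for message in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return system + list(reversed(kept))
```

Anything that should survive beyond the window, such as user preferences or project notes, has to be stored by the app and reinserted explicitly.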

Designing LLM apps with partial autonomy

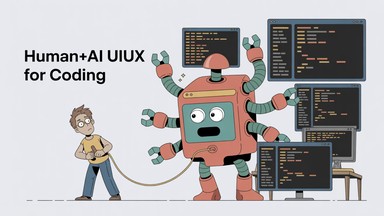

This brings me to the opportunities for using these models, which is not a comprehensive list, but just some things I found interesting. I'm particularly excited about what I would call 'partial autonomy apps.' For coding, you could copy-paste code and bug reports into a chat interface, but why go directly to the operating system? It makes more sense to use a dedicated app like Cursor.

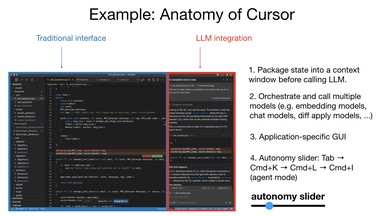

Cursor is a great example of an early LLM app that has many useful properties. It provides a traditional interface for manual work but adds LLM integration for handling larger chunks of work. A key feature of such apps is that they manage context and orchestrate multiple calls to different LLMs, such as embedding models and chat models that apply diffs to your code.

Another underappreciated feature is the application-specific GUI. You don't want to interact with the OS in plain text, which is hard to interpret. It's much better to see a diff as a red and green change and accept or reject it with a keystroke, allowing a human to audit the work of these fallible systems much faster.
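The red-and-green diff idea can be sketched with Python's standard `difflib`. This is an illustration of the auditing pattern only, not how Cursor implements it; the ANSI colors and the `review` helper are assumptions for the example.

```python
import difflib

RED, GREEN, RESET = "\033[31m", "\033[32m", "\033[0m"


def render_diff(old: str, new: str, filename: str = "file.py") -> str:
    """Return a unified diff with removals in red and additions in green."""
    diff = difflib.unified_diff(
        old.splitlines(),
        new.splitlines(),
        fromfile=f"a/{filename}",
        tofile=f"b/{filename}",
        lineterm="",
    )
    out = []
    for i, line in enumerate(diff):
        if i < 2:  # the first two lines are the ---/+++ file headers
            out.append(line)
        elif line.startswith("+"):
            out.append(f"{GREEN}{line}{RESET}")
        elif line.startswith("-"):
            out.append(f"{RED}{line}{RESET}")
        else:
            out.append(line)
    return "\n".join(out)


def review(old: str, new: str, accept: bool) -> str:
    """The human stays in the loop: keep the original unless the change is accepted."""
    return new if accept else old
```

In a real app the `accept` flag would come from a keystroke or a button, so the human decision costs a fraction of a second per hunk.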

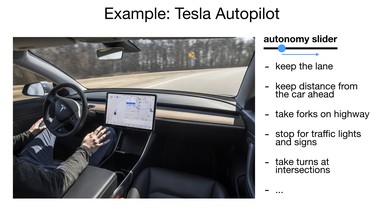

I will come back to this point later. The last feature I want to point out is the 'autonomy slider.' In Cursor, you can use simple tab-completion, edit a selected chunk of code, change an entire file, or let it operate on the whole repository. You are in charge of the level of autonomy depending on the task's complexity.
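One way to picture the autonomy slider in code is as an ordered setting that gates how far-reaching a proposed change may be. The level names below mirror the four Cursor modes just listed; the `ProposedChange` type and the gating rule are hypothetical, a sketch rather than any product's actual design.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    """Ordered levels: a higher value hands more of the work to the model."""
    TAB_COMPLETE = 1
    EDIT_SELECTION = 2
    EDIT_FILE = 3
    EDIT_REPO = 4


@dataclass
class ProposedChange:
    description: str
    scope: Autonomy  # the lowest slider setting that would permit this change


def is_permitted(change: ProposedChange, slider: Autonomy) -> bool:
    """A change goes ahead only if the slider is at or above the scope it needs."""
    return change.scope <= slider
```

For example, with the slider at `Autonomy.EDIT_FILE`, a repository-wide rename is rejected while a single-file refactor is allowed.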

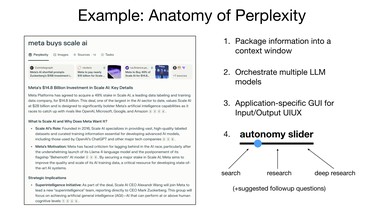

Perplexity is another successful LLM app with similar features. It packages information, orchestrates LLMs, has a GUI for auditing sources, and includes an autonomy slider for quick searches or deep research. This leads to my question: I feel like a lot of software will become partially autonomous.

I'm trying to think through what that looks like. For those of you who maintain products, how will you make them partially autonomous? Can an LLM see and act in all the ways a human can, and how can humans supervise and stay in the loop with these fallible systems?

What does a diff look like in Photoshop? Also, a lot of traditional software with its switches and controls designed for humans will have to change to become accessible to LLMs.

The importance of human-AI collaboration loops

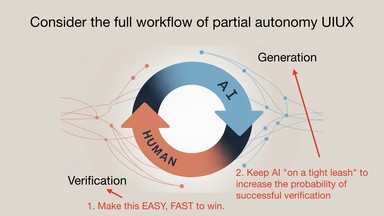

One thing that doesn't get enough attention with LLM apps is that we are cooperating with AIs. They generate, and we verify, so it's in our interest to make this loop as fast as possible. This can be done by speeding up verification; GUIs are great for this because they utilize our visual processing.

We have to keep the AI on a leash. Many people are too excited about AI agents. It's not useful to get a 1,000-line diff to a repo, because I am still the bottleneck who has to verify it for bugs and security issues. The AI can be overreactive, and when I'm doing AI-assisted coding, it's not great to have an overreactive agent doing all this kind of stuff.

I'm trying to develop ways to utilize these agents in my coding workflow. I am always wary of getting overly large diffs, so I work in small, incremental chunks to spin the verification loop quickly. Many of you are likely developing similar ways of working with LLMs.

I've also seen blog posts that try to develop best practices for working with LLMs, and one I read recently discussed techniques for keeping the AI on a leash. For example, a vague prompt may lead to an undesirable result, causing verification to fail and forcing you to start over. It makes more sense to be concrete in your prompts to increase the probability of a successful verification.

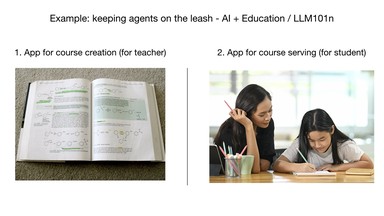

Many of us will end up finding techniques like this. In my own work, I'm currently interested in what education looks like now that we have AI and LLMs. A large amount of my thought goes into how we keep the AI on the leash.

I don't think it works to just go to a chat and say, "Hey, teach me physics," because the AI gets lost in the woods. For me, this is actually two separate apps: one for a teacher to create courses, and another that takes those courses and serves them to students. In both cases, we now have this intermediate artifact of a course that is auditable.

We can make sure it's good and consistent, and the AI is kept on a leash with respect to a certain syllabus and progression of projects. This is one way of keeping the AI on a leash and has a much higher likelihood of working.

Lessons from Tesla Autopilot & autonomy sliders

I'm no stranger to partial autonomy, having worked on it for five years at Tesla. Autopilot is also a partial autonomy product and shares a lot of the features, like the GUI of the autopilot right in the instrument panel. It's showing me what the neural network sees, and we have the autonomy slider where we did more and more autonomous tasks for the user over my tenure. The first time I drove a self-driving vehicle was in 2013.

I had a friend who worked at Waymo, and he offered to give me a drive around Palo Alto; I took a picture using Google Glass at the time. We got into this car and went for a 30-minute drive, and the drive was perfect with zero interventions. This was in 2013, which is now over 10 years ago.

It struck me because at the time, this perfect demo made me feel like self-driving was imminent. Yet here we are, still working on autonomy and driving agents. Even now, we haven't really solved the problem.

You may see Waymos going around that look driverless, but there's still a lot of teleoperation and human-in-the-loop for much of this driving. We still haven't declared success, but it's definitely going to succeed at this point. It just took a long time, and software is tricky in the same way that driving is tricky.

So when I see things like, "2025 is the year of agents," I get very concerned. This is the decade of agents, and it's going to be quite some time. We need humans in the loop and to do this carefully; let's be serious here.

The Iron Man analogy: Augmentation vs. agents

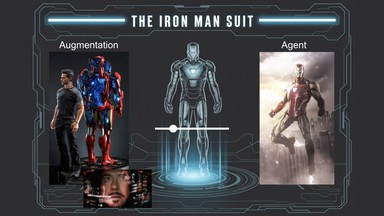

One more analogy that I always think through is the Iron Man suit. I always love Iron Man, and I think it's so correct in a bunch of ways with respect to technology and how it will play out. What I love about the Iron Man suit is that it's both an augmentation that Tony Stark can drive, and it's also an agent. In some of the movies, the Iron Man suit is quite autonomous and can fly around and find Tony.

This is the autonomy slider: we can build augmentations or we can build agents, and we want to do a bit of both. At this stage, working with fallible LLMs, I would say you want to build more Iron Man suits than Iron Man robots. It's less about building flashy demos of autonomous agents and more about building partial autonomy products.

These products have custom GUIs and UI/UX so that the generation-verification loop of the human is very fast. We are not losing sight of the fact that it is, in principle, possible to automate this work. There should be an autonomy slider in your product, and you should be thinking about how you can slide that and make your product more autonomous over time. There's lots of opportunity in these kinds of products.

Vibe Coding: Everyone is now a programmer

I want to now switch gears and talk about one other dimension that I think is very unique. Not only is there a new type of programming language that allows for autonomy in software, but as I mentioned, it's programmed in English. This natural interface suddenly makes everyone a programmer because everyone speaks a natural language like English.

This is extremely bullish, very interesting, and completely unprecedented. It used to be the case that you needed to spend five to 10 years studying something to be able to do something in software, but that's no longer the case. I don't know if by any chance anyone has heard of 'vibe coding.'

This is the tweet that introduced this, but I'm told that this is now a major meme. Fun story about this: I've been on Twitter for like 15 years and I still have no clue which tweet will become viral and which will fizzle. I thought this tweet was going to be the latter, but it became a total meme.

I really just can't tell, but I guess it struck a chord and it gave a name to something that everyone was feeling but couldn't quite say in words. Now there's a Wikipedia page and everything; this is like a major contribution now.

Tom Wolf from Hugging Face shared this beautiful video that I really love. These are kids vibe coding, and I find that this is such a wholesome video. How can you look at this video and feel bad about the future? The future is great.

I think this will end up being a gateway drug to software development, and I'm not a doomer about the future of the generation. I tried vibe coding a little bit as well because it's so fun. Vibe coding is so great when you want to build something super custom that doesn't appear to exist and you just want to wing it on a Saturday.

So I built this iOS app, and I can't actually program in Swift, but I was really shocked that I was able to build a super basic app. I'm not going to explain it, it's really dumb, but this was just a day of work and it was running on my phone later that day. I was like, "Wow, this is amazing."

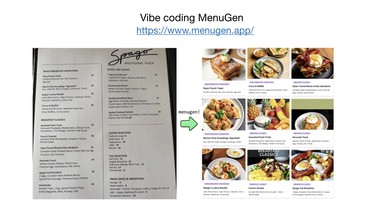

I didn't have to read through Swift for five days to get started. I also vibe-coded this app called MenuGen, and this is live; you can try it at menugen.app. I basically had this problem where I show up at a restaurant, read the menu, and I have no idea what any of the things are and I need pictures.

Since this doesn't exist, I decided to code it. You go to menugen.app, you take a picture of a menu, and then MenuGen generates the images. Everyone gets $5 in credits for free when you sign up, and therefore this is a major cost center in my life; this is a negative-revenue app for me right now.

I've lost a huge amount of money on MenuGen. The fascinating thing about MenuGen for me is that the vibe coding part was actually the easy part. Most of the work was when I tried to make it real, so that you can actually have authentication, payments, the domain name, and Vercel deployment.

This was really hard, and all of this was not code; all of this DevOps stuff was me in the browser clicking stuff. This was extremely slow and took another week. It was really fascinating that I had the MenuGen demo working on my laptop in a few hours, and then it took me a week because I was trying to make it real, which was just really annoying.

For example, if you try to add Google login to your web page, there's a huge amount of instructions from this Clerk library telling me how to integrate it. It's telling me to go to this URL, click on this drop-down, choose this, and click on that. A computer is telling me the actions I should be taking. You do it! Why am I doing this? I had to follow all these instructions; it was crazy.

Building for agents: Future-ready digital infrastructure

So the last part of my talk focuses on this: can we just build for agents? I don't want to do this work; can agents do this?

Roughly speaking, I think there's a new category of consumer and manipulator of digital information. It used to be just humans through GUIs or computers through APIs. Now we have a completely new thing: agents. They're computers, but they are human-like 'people spirits' on the internet, and they need to interact with our software infrastructure. Can we build for them?

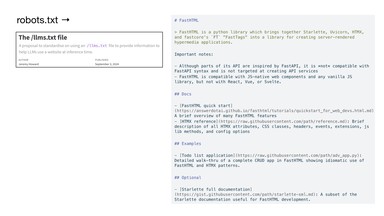

For example, you can have robots.txt on your domain to advise web crawlers on how to behave. In the same way, you can have maybe llms.txt, which is just a simple markdown file that's telling LLMs what this domain is about. This is very readable to an LLM.

If it had to instead get the HTML of your web page and try to parse it, this is very error-prone and difficult. We can just directly speak to the LLM; it's worth it.
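As a minimal sketch of such a file, the helper below assembles a short markdown summary of a site. The layout (a title, a one-line summary, then sections of annotated links) follows the general shape of the llms.txt proposal as I understand it, and the project name and URLs are made-up placeholders.

```python
def build_llms_txt(title: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Assemble a small markdown file describing a site for LLM readers.

    `sections` maps a heading to a list of (link name, url, short note) tuples.
    """
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for name, url, note in links:
            lines.append(f"- [{name}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical usage with placeholder content.
EXAMPLE = build_llms_txt(
    "Example Project",
    "A payments API with client libraries for several languages.",
    {"Docs": [("Quickstart", "https://example.com/docs/quickstart.md",
               "Install and first request")]},
)
```

The result is plain text that a model can read directly, with no HTML parsing step in between.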

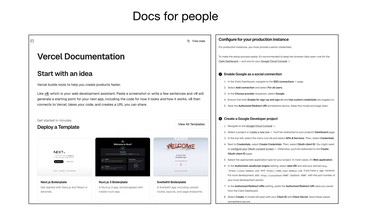

A huge amount of documentation is currently written for people, with lists, bolding, and pictures that are not directly accessible by an LLM.

I see some services now are transitioning their docs to be specifically for LLMs. Vercel and Stripe, as an example, are early movers here, and they offer their documentation in markdown. Markdown is super easy for LLMs to understand.

As a simple example from my experience, maybe some of you know 3Blue1Brown; he makes beautiful animation videos on YouTube. I love the library he wrote, Manim, and I wanted to make my own animation. There's extensive documentation on how to use it, and I didn't want to actually read through it.

So I copy-pasted the whole thing to an LLM, I described what I wanted, and it just worked out of the box. The LLM just vibe-coded me an animation of exactly what I wanted, and I was amazed. If we can make docs legible to LLMs, it's going to unlock a huge amount of use, and I think this is wonderful.

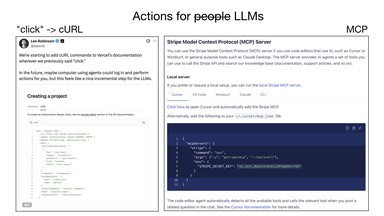

The other thing I wanted to point out is that you do unfortunately have to change the docs, not just put them in markdown. Anytime your docs say "click," this is bad, as an LLM will not be able to natively take this action right now. Vercel, for example, is replacing every occurrence of "click" with an equivalent cURL command that an agent could take on your behalf.

I think this is very interesting. Then, of course, there's the Model Context Protocol from Anthropic, which is another protocol for speaking directly to agents as this new consumer and manipulator of digital information. I'm very bullish on these ideas.
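Returning to the point about docs that say "click": a small check like the following could flag instructions an agent cannot carry out, so that each one can be given a command-line equivalent. This is a hypothetical lint of my own, not a tool that Vercel or anyone else is known to use.

```python
import re

# Matches "click", "clicks", "clicked", "clicking" as whole words.
CLICK = re.compile(r"\bclick(?:s|ed|ing)?\b", re.IGNORECASE)


def find_click_steps(doc: str) -> list[tuple[int, str]]:
    """Return (line number, text) for every line that tells the reader to click."""
    return [
        (number, line.strip())
        for number, line in enumerate(doc.splitlines(), start=1)
        if CLICK.search(line)
    ]
```

Each flagged line is a candidate for rewriting as an API call or shell command.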

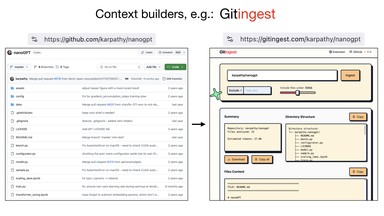

The other thing I really like is a number of little tools that are helping ingest data in very LLM-friendly formats. For example, when I go to a GitHub repo, I can't feed this to an LLM and ask questions about it because it's a human interface. When you just change the URL from GitHub to 'gitingest', it will actually concatenate all the files into a single giant text file. It will create a directory structure, and this is ready to be copy-pasted into your favorite LLM.
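The idea is simple enough to sketch locally. The code below is an illustration of the concatenate-with-a-directory-listing approach, not Gitingest's actual implementation; the separator format and the default file suffixes are assumptions, and the URL helper assumes the tool is served at gitingest.com.

```python
from pathlib import Path
from urllib.parse import urlparse, urlunparse


def to_ingest_url(github_url: str) -> str:
    """Swap the host so a GitHub repo URL points at the ingest service instead."""
    parts = urlparse(github_url)
    if parts.netloc != "github.com":
        raise ValueError(f"not a github.com URL: {github_url}")
    return urlunparse(parts._replace(netloc="gitingest.com"))


def flatten_repo(root: str, suffixes=(".py", ".md", ".txt")) -> str:
    """Concatenate a checkout's text files into one LLM-friendly string.

    The output starts with a listing of the included paths, followed by
    each file's contents under a clearly marked header.
    """
    base = Path(root)
    files = sorted(p for p in base.rglob("*") if p.is_file() and p.suffix in suffixes)
    listing = "\n".join(p.relative_to(base).as_posix() for p in files)
    chunks = [f"Directory structure:\n{listing}"]
    for path in files:
        rel = path.relative_to(base).as_posix()
        body = path.read_text(encoding="utf-8", errors="replace")
        chunks.append(f"{'=' * 40}\nFILE: {rel}\n{'=' * 40}\n{body}")
    return "\n\n".join(chunks)
```

For instance, `to_ingest_url("https://github.com/user/repo")` gives `https://gitingest.com/user/repo`.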

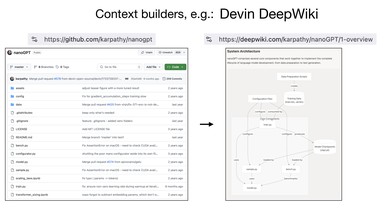

A maybe even more dramatic example of this is DeepWiki, which is from Devin. It's not just the raw content of these files; Devin basically does an analysis of the GitHub repo and builds up whole documentation pages just for your repo.

You can imagine that this is even more helpful to copy-paste into your LLM. I love all the little tools where you just change the URL and it makes something accessible to an LLM. It is absolutely possible that in the future, LLMs will be able to go around and click stuff.

But I still think it's very worth meeting LLMs halfway and making it easier for them to access this information, because having them click through interfaces is still fairly expensive and a lot more difficult to use. I do think that for a long tail of software, we will need these tools for apps that don't adapt. But for everyone else, it's very worth meeting in some middle point. I'm bullish on both, if that makes sense.

Summary: We’re in the 1960s of LLMs — time to build

So in summary, what an amazing time to get into the industry. We need to rewrite a ton of code, which will be written by professionals and by vibe coders. These LLMs are like utilities and fabs, but they're especially like operating systems, albeit from the 1960s.

A lot of the analogies cross over. These LLMs are fallible 'people spirits' that we have to learn to work with, and to do that properly, we need to adjust our infrastructure. When you're building these LLM apps, I described some of the ways of working effectively with them.

I described some of the tools that make that possible and how you can spin this loop very quickly to create partial autonomy products. A lot of code also has to be written for the agents more directly.

But in any case, going back to the Iron Man suit analogy, I think what we'll see over the next decade is us taking the slider from left to right.

It's going to be very interesting to see what that looks like. I can't wait to build it with all of you. Thank you.